Introduction

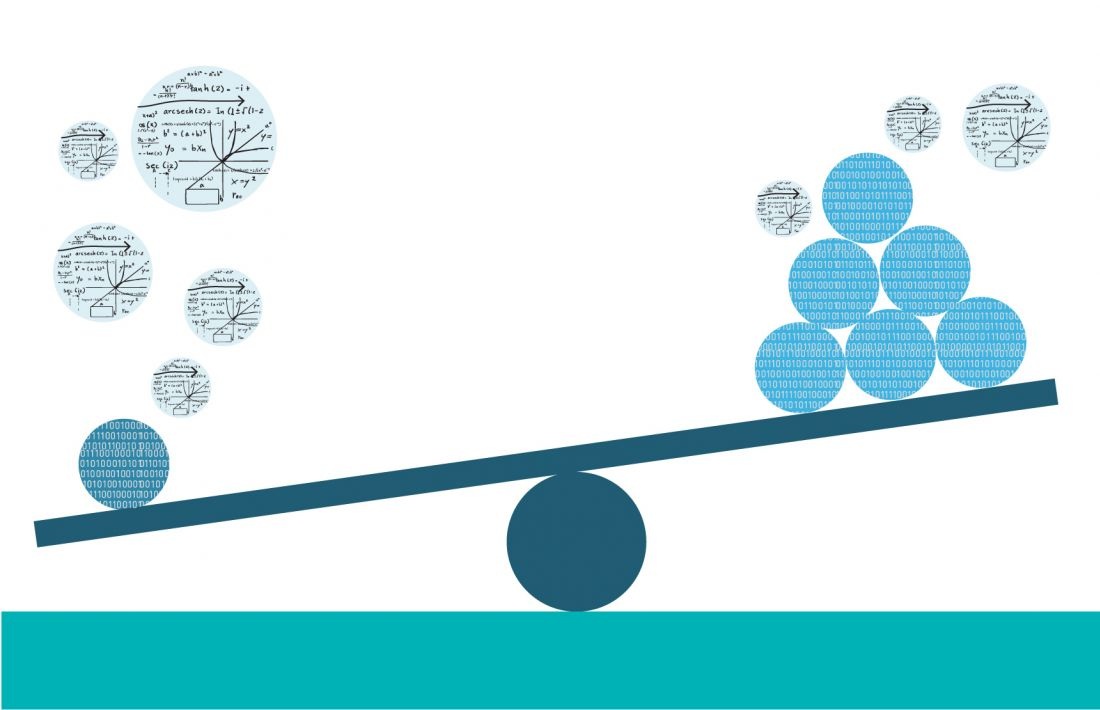

In machine learning, dealing with imbalanced datasets is a common challenge that can significantly impact the performance of predictive models. Imbalanced datasets occur when the classes in the target variable are not equally represented, making it difficult for algorithms to accurately predict minority classes. Advanced techniques have been developed to address this challenge, enabling practitioners to build robust and fair models even with skewed data distributions. Enrolling in a Data Science Course can equip professionals with the necessary skills to effectively tackle such challenges.

Understanding the Problem of Imbalanced Datasets

An imbalanced dataset typically has a dominant class (majority class) and an underrepresented class (minority class). In binary classification problems, for instance, an imbalanced dataset might have 95% of instances belonging to one class and only 5% to the other. Without proper handling, machine learning models tend to focus on the majority class, leading to high accuracy but poor performance in predicting the minority class.

The consequences of ignoring class imbalance can be severe, particularly in applications where the minority class is critical, such as detecting fraudulent transactions, diagnosing rare diseases, or identifying defective products in manufacturing.

Evaluating Models with Imbalanced Data

Traditional evaluation metrics, such as accuracy, are often misleading in the context of imbalanced datasets. For example, a model that predicts only the majority class could achieve 95% accuracy in a highly imbalanced dataset but fail entirely to predict the minority class. Instead, practitioners use the following metrics:

- Precision and Recall: Precision measures the proportion of true positive predictions among all positive predictions, while recall measures the proportion of true positives identified out of all actual positives.

- F1-Score: The harmonic mean of precision and recall, providing a balanced metric even when classes are imbalanced.

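- ROC-AUC and PR-AUC: The area under the Receiver Operating Characteristic curve (ROC-AUC) summarises how well the model ranks positives above negatives across all thresholds. The area under the Precision-Recall curve (PR-AUC) focuses on the positive class and is usually the more informative of the two when that class is rare, because ROC-AUC can look optimistic when true negatives dominate.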

Understanding these metrics is crucial, and many Data Science Courses include modules that cover them in detail, enabling learners to make informed decisions when evaluating model performance.
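To make the accuracy pitfall concrete, here is a minimal sketch that computes these metrics from scratch on a 95/5 split. The function name `precision_recall_f1` and the toy labels are illustrative assumptions, not part of any library.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Return (precision, recall, F1) for the chosen positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return precision, recall, f1


# Toy data: 95 majority-class (0) and 5 minority-class (1) examples.
y_true = [0] * 95 + [1] * 5

# A "model" that always predicts the majority class.
always_majority = [0] * 100
accuracy = sum(t == p for t, p in zip(y_true, always_majority)) / len(y_true)
baseline_metrics = precision_recall_f1(y_true, always_majority)

# A model that finds 4 of the 5 positives at the cost of 4 false alarms.
some_recall = [1] * 4 + [0] * 91 + [1] * 4 + [0]
useful_metrics = precision_recall_f1(y_true, some_recall)
```

The always-majority baseline scores 95% accuracy yet has zero precision, recall, and F1 for the minority class, while the second model trades a little accuracy for a recall of 0.8.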

Advanced Techniques for Handling Imbalanced Datasets

The following sections briefly describe some of the advanced techniques for handling imbalanced datasets.

Data-Level Techniques

These methods modify the dataset to reduce imbalance and ensure the model receives balanced training data.

- Oversampling the Minority Class: Techniques such as Synthetic Minority Oversampling Technique (SMOTE) generate synthetic samples for the minority class. SMOTE creates new instances by interpolating between existing minority samples, effectively increasing the representation of the minority class.

- Undersampling the Majority Class: In this approach, random samples from the majority class are removed to match the size of the minority class. However, this can lead to loss of important information.

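- Hybrid Sampling: Combining oversampling and undersampling can balance the classes while limiting the drawbacks of each: less information is discarded than with pure undersampling, and fewer synthetic samples are needed than with pure oversampling.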

- Data Augmentation: For image or text data, augmentation techniques such as rotation, flipping, or paraphrasing can increase the diversity of minority class examples.
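The interpolation idea behind SMOTE can be sketched in a few lines. This is a simplified illustration under stated assumptions, not the reference algorithm: the function name `smote_like`, the parameter defaults, and the toy points are all hypothetical, and a production workflow would normally rely on a maintained library implementation.

```python
import random


def smote_like(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating between a random
    minority sample and one of its k nearest minority neighbours.
    Assumes at least two minority samples."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by squared Euclidean distance.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(base, p)))
        neighbour = rng.choice(others[:k])
        gap = rng.random()  # position along the segment base -> neighbour
        synthetic.append(
            tuple(a + gap * (b - a) for a, b in zip(base, neighbour))
        )
    return synthetic


minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1), (1.1, 1.3)]
new_points = smote_like(minority, n_new=6)
```

Each synthetic point lies on a line segment between two real minority samples, which is why oversampling of this kind should be applied to the training split only, never before the train/test split.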

Algorithm-Level Techniques

These methods modify algorithms to handle class imbalance more effectively.

- Class Weights: Many machine learning algorithms, such as logistic regression, support vector machines, and decision trees, allow assigning higher weights to minority class samples during training. This ensures that the model pays more attention to the underrepresented class.

- Cost-Sensitive Learning: Cost-sensitive algorithms incorporate the misclassification costs directly into the learning process, penalising the model more heavily for misclassifying minority class instances.
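As a rough illustration of both ideas, the sketch below derives inverse-frequency class weights and plugs them into a weighted log loss. The weighting formula `n_samples / (n_classes * class_count)` is the common "balanced" heuristic; the function names and the choice to average over the number of samples are assumptions made for this example.

```python
import math
from collections import Counter


def balanced_class_weights(y):
    """Weight each class by n_samples / (n_classes * class_count)."""
    counts = Counter(y)
    n_samples, n_classes = len(y), len(counts)
    return {label: n_samples / (n_classes * c) for label, c in counts.items()}


def weighted_log_loss(y_true, p_pred, weights):
    """Mean binary log loss where each example is scaled by its class weight.
    p_pred holds the predicted probability of class 1."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, 1e-12), 1 - 1e-12)  # avoid log(0)
        loss = -math.log(p) if t == 1 else -math.log(1 - p)
        total += weights[t] * loss
    return total / len(y_true)


y = [0] * 95 + [1] * 5
weights = balanced_class_weights(y)

# The same size of mistake costs far more on a minority example.
missed_minority = weighted_log_loss([1], [0.1], weights)
missed_majority = weighted_log_loss([0], [0.9], weights)
```

With a 95/5 split the minority class receives a weight of 10 and the majority class roughly 0.53, so both classes contribute the same total weight to the loss.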

Ensemble Techniques

- Balanced Bagging: Combines bagging with resampling to ensure balanced subsets for training.

- Balanced Random Forests: Trains each tree on a bootstrap sample that has been balanced, typically by undersampling the majority class, so that no single tree is dominated by majority examples.
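The resampling step shared by both ensemble methods can be sketched as follows. This is only the sampling part, under the assumption that each base learner is then trained on one such subset; `balanced_bootstrap` is a hypothetical helper name.

```python
import random


def balanced_bootstrap(X, y, seed=0):
    """Draw a bootstrap sample with the same number of examples per class,
    equal to the size of the smallest class (sampling with replacement)."""
    rng = random.Random(seed)
    by_class = {}
    for i, label in enumerate(y):
        by_class.setdefault(label, []).append(i)
    n_min = min(len(idx) for idx in by_class.values())
    chosen = []
    for idx in by_class.values():
        chosen.extend(rng.choices(idx, k=n_min))
    rng.shuffle(chosen)
    return [X[i] for i in chosen], [y[i] for i in chosen]


X = list(range(100))          # stand-in feature rows
y = [0] * 95 + [1] * 5
# One balanced subset per base learner; different seeds give different subsets.
subsets = [balanced_bootstrap(X, y, seed=s) for s in range(3)]
```

Because every learner sees a different balanced subset, the ensemble as a whole still uses most of the majority-class data even though each individual learner sees only a small part of it.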

Feature Engineering

Feature selection and engineering play a crucial role in addressing imbalance. Identifying and focusing on features that are more informative for the minority class can improve model performance. Domain expertise is often critical in this process. A career-oriented data course, for instance a Data Science Course in Mumbai designed for professionals, can provide practical exercises on feature engineering for imbalanced datasets.

Anomaly Detection Techniques

In some cases, imbalanced classification can be reframed as an anomaly detection problem, where the minority class is treated as an anomaly. Algorithms like Isolation Forests or One-Class SVMs are effective for such scenarios.

Advanced Deep Learning Techniques

- Class Imbalance in Neural Networks: Deep learning models, like convolutional neural networks (CNNs) and recurrent neural networks (RNNs), can handle imbalanced data with techniques such as focal loss, which down-weights the loss for well-classified examples so that training concentrates on hard examples, which in imbalanced problems often belong to the minority class.

- GANs for Data Augmentation: Generative Adversarial Networks (GANs) can generate realistic samples for the minority class, improving the diversity and representation of imbalanced datasets.
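The focal loss mentioned above can be written for a single binary example as follows. This is a plain-Python sketch for illustration only; the defaults `gamma=2.0` and `alpha=0.25` are commonly quoted settings and should be treated as assumptions to tune, and a real model would use a framework's tensor operations instead.

```python
import math


def binary_focal_loss(y_true, p_pred, gamma=2.0, alpha=0.25):
    """Focal loss for one example: -alpha_t * (1 - p_t)**gamma * log(p_t).
    p_pred is the predicted probability of class 1."""
    p = min(max(p_pred, 1e-12), 1 - 1e-12)  # avoid log(0)
    p_t = p if y_true == 1 else 1 - p        # probability of the true class
    alpha_t = alpha if y_true == 1 else 1 - alpha
    return -alpha_t * (1 - p_t) ** gamma * math.log(p_t)


easy = binary_focal_loss(1, 0.9)   # confidently correct positive
hard = binary_focal_loss(1, 0.1)   # badly misclassified positive
```

With `gamma=0` the modulating factor disappears and the expression reduces to ordinary class-weighted cross-entropy; larger `gamma` values shrink the contribution of easy examples more aggressively.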

Threshold Adjustment

Adjusting the decision threshold of the classifier can help balance precision and recall. Instead of using the default threshold (0.5 in binary classification), it can be tuned to favour the minority class based on the application’s requirements.
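A simple way to tune the threshold is to scan candidate values and keep the one that maximises the metric of interest. The sketch below uses F1 as that metric; the helper names and the toy scores are illustrative assumptions, and in practice the scan should be run on a validation set rather than the test set.

```python
def f1_at_threshold(y_true, scores, threshold):
    """F1 for the positive class when predicting 1 if score >= threshold."""
    y_pred = [1 if s >= threshold else 0 for s in scores]
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def best_f1_threshold(y_true, scores):
    """Try every distinct score as a threshold and return the best one."""
    candidates = sorted(set(scores))
    return max(candidates, key=lambda thr: f1_at_threshold(y_true, scores, thr))


# Toy validation data: predicted probabilities for 7 negatives, 3 positives.
y_true = [0, 0, 0, 0, 0, 0, 1, 0, 1, 1]
scores = [0.05, 0.1, 0.1, 0.2, 0.2, 0.3, 0.35, 0.4, 0.45, 0.8]

default_f1 = f1_at_threshold(y_true, scores, 0.5)
tuned_threshold = best_f1_threshold(y_true, scores)
tuned_f1 = f1_at_threshold(y_true, scores, tuned_threshold)
```

On this toy data, lowering the threshold from 0.5 to 0.35 recovers all three positives at the cost of one false positive, which raises F1 from 0.5 to about 0.86.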

Best Practices for Handling Imbalanced Data

- Analyse the Data: Understand the class distribution, identify potential biases, and explore the features that contribute to class differentiation.

- Choose the Right Metric: Select evaluation metrics that emphasise the performance of the minority class, such as F1-score or PR-AUC.

- Experiment with Multiple Techniques: There is no one-size-fits-all solution. Experiment with different resampling methods, algorithms, and hyperparameters to find the best approach for your data.

- Validate with Stratified Sampling: Use stratified sampling during cross-validation to ensure that each fold maintains the original class distribution.

- Monitor Overfitting: Oversampling or using complex models may lead to overfitting. Regularisation techniques and careful evaluation on held-out data that has not been resampled can mitigate this risk. Professionals can gain in-depth exposure to these approaches through a well-rounded course at a reputable learning centre, for instance a Data Science Course in Mumbai that focuses on advanced machine learning techniques.
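The stratified sampling advice above can be sketched as follows: indices are grouped by class and dealt out to the folds in turn, so each fold keeps roughly the original class ratio. `stratified_folds` is a hypothetical helper written for this illustration; library implementations offer the same idea with more options.

```python
import random
from collections import Counter


def stratified_folds(y, n_splits=5, seed=0):
    """Split indices into n_splits folds that preserve the class ratio."""
    rng = random.Random(seed)
    by_class = {}
    for i, label in enumerate(y):
        by_class.setdefault(label, []).append(i)
    folds = [[] for _ in range(n_splits)]
    for idx in by_class.values():
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            folds[pos % n_splits].append(i)  # deal indices round-robin
    return folds


y = [0] * 95 + [1] * 5
folds = stratified_folds(y, n_splits=5)
fold_counts = [Counter(y[i] for i in fold) for fold in folds]
```

With 95 majority and 5 minority examples, each of the five folds receives 19 majority examples and exactly one minority example, whereas a purely random split could easily leave a fold with no minority examples at all.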

Challenges and Future Directions

While advanced techniques have made significant progress in addressing imbalanced datasets, challenges remain:

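- Bias in Synthetic Data: Oversampling techniques like SMOTE can introduce noise or bias if not applied carefully, for example when synthetic points are interpolated into regions that overlap the majority class, or when resampling is done before the train/test split and leaks information into evaluation.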

- Scalability: Handling large-scale imbalanced datasets with high-dimensional features remains computationally intensive.

- Imbalanced Multi-Class Problems: Multi-class imbalances introduce additional complexity, requiring specialised strategies.

Future research is exploring adaptive sampling methods, meta-learning frameworks, and automated tools that dynamically adjust to class imbalance during training.

Conclusion

Handling imbalanced datasets is essential for building reliable and fair machine learning models. Advanced techniques such as resampling, cost-sensitive learning, and algorithm modifications provide powerful tools to address this challenge. By selecting the appropriate methods and combining them with robust evaluation metrics, practitioners can ensure that their models perform effectively, even in the face of class imbalance.

A Data Science Course not only introduces these concepts but also offers practical experience with real-world datasets, preparing learners to excel in tackling imbalanced data challenges. Mastering these techniques is vital for data scientists aiming to build impactful and accurate predictive models in diverse applications.

Business Name: ExcelR- Data Science, Data Analytics, Business Analyst Course Training Mumbai

Address: Unit no. 302, 03rd Floor, Ashok Premises, Old Nagardas Rd, Nicolas Wadi Rd, Mogra Village, Gundavali Gaothan, Andheri E, Mumbai, Maharashtra 400069, Phone: 09108238354, Email: enquiry@excelr.com.
